Introduction

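This note covers installing Kubernetes Dashboard, starting from metrics server, and then setting up an Istio virtual service / gateway for it. Finally, it generates a token that has read-only access to one specific namespace only.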

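Most readers can skip the metrics server step, because many Kubernetes clusters (especially managed ones) already ship it as a default component. If kubectl top nodes already returns data, metrics server is running.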

Installing metrics server

metrics server

```shell
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml
```

Because the certificates on our kubelets are self-signed, we have to add --kubelet-insecure-tls to the metrics-server command. Otherwise it fails with this error:

```
E0612 02:09:11.062573 1 scraper.go:140] "Failed to scrape node" err="Get \"https://8.8.8.8:10250/metrics/resource\": x509: cannot validate certificate for 8.8.8.8 because it doesn't contain any IP SANs" node="ip-8-8-8-8.ap-taiwan-1.compute.internal"
```
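The flag belongs in the args of the metrics-server container. A minimal sketch of the relevant fragment, assuming the Deployment name (metrics-server) and namespace (kube-system) from the upstream components.yaml; only the added line is shown, and the existing args stay as they are. Apply it with kubectl edit deployment -n kube-system metrics-server rather than as a patch, since a patch would replace the whole args list.

```yaml
# Fragment of the metrics-server Deployment (namespace kube-system).
spec:
  template:
    spec:
      containers:
        - name: metrics-server
          args:
            # ...existing args from components.yaml stay here...
            # Skip TLS verification of the kubelets' self-signed serving certs.
            # Note: this disables certificate checks toward every kubelet.
            - --kubelet-insecure-tls
```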

Installing Kubernetes Dashboard

Deploy and Access the Kubernetes Dashboard

```shell
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml
```

```
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
```

Once deployed, open a tunnel with sudo kubectl proxy and browse to http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/ to confirm the dashboard is working.

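Next, we have to modify the default Deployment and Service. By default the dashboard uses a self-signed certificate and serves HTTPS, but all app traffic inside this K8s cluster runs over plain HTTP on tcp/80, and the AWS ALB in front is configured to forward tcp/443 to tcp/80 (to the origin).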

If the dashboard Deployment/Service is left as-is, requests fail with Client sent an HTTP request to an HTTPS server. (status 400).

```shell
kubectl edit deployment -n kubernetes-dashboard kubernetes-dashboard
```

- In args, remove --auto-generate-certificates
- Add --insecure-bind-address=0.0.0.0
- Add --enable-insecure-login
- Change containerPort from 8443 to 9090
- Change livenessProbe to:

```yaml
livenessProbe:
  httpGet:
    scheme: HTTP
    path: /
    port: 9090
```
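Put together, the edited container might look roughly like the sketch below. It assumes the v2.7.0 recommended.yaml, where the only other arg is --namespace=kubernetes-dashboard, and that 9090 is the dashboard's default insecure port; unrelated fields (image, volumeMounts, securityContext and so on) are omitted.

```yaml
# Sketch: kubernetes-dashboard container after the edits listed above.
spec:
  template:
    spec:
      containers:
        - name: kubernetes-dashboard
          ports:
            - containerPort: 9090            # was 8443 (HTTPS)
              protocol: TCP
          args:
            # --auto-generate-certificates removed: no self-signed HTTPS
            - --namespace=kubernetes-dashboard
            - --insecure-bind-address=0.0.0.0  # serve plain HTTP on all interfaces
            - --enable-insecure-login          # allow token login over HTTP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 9090
```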

```shell
kubectl edit svc -n kubernetes-dashboard kubernetes-dashboard
```
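The note doesn't spell out what changes in the Service. Since the VirtualService later in this note still routes to port 443 of kubernetes-dashboard, one plausible minimal change is to keep port 443 and point targetPort at the container's new plain-HTTP port 9090. A sketch under that assumption:

```yaml
# Assumed edit: port 443 kept, targetPort moved from 8443 to 9090.
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
    - port: 443
      targetPort: 9090   # plain HTTP now, despite the 443 service port
```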

After the change, the dashboard is still reachable through sudo kubectl proxy, but the URL becomes http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/

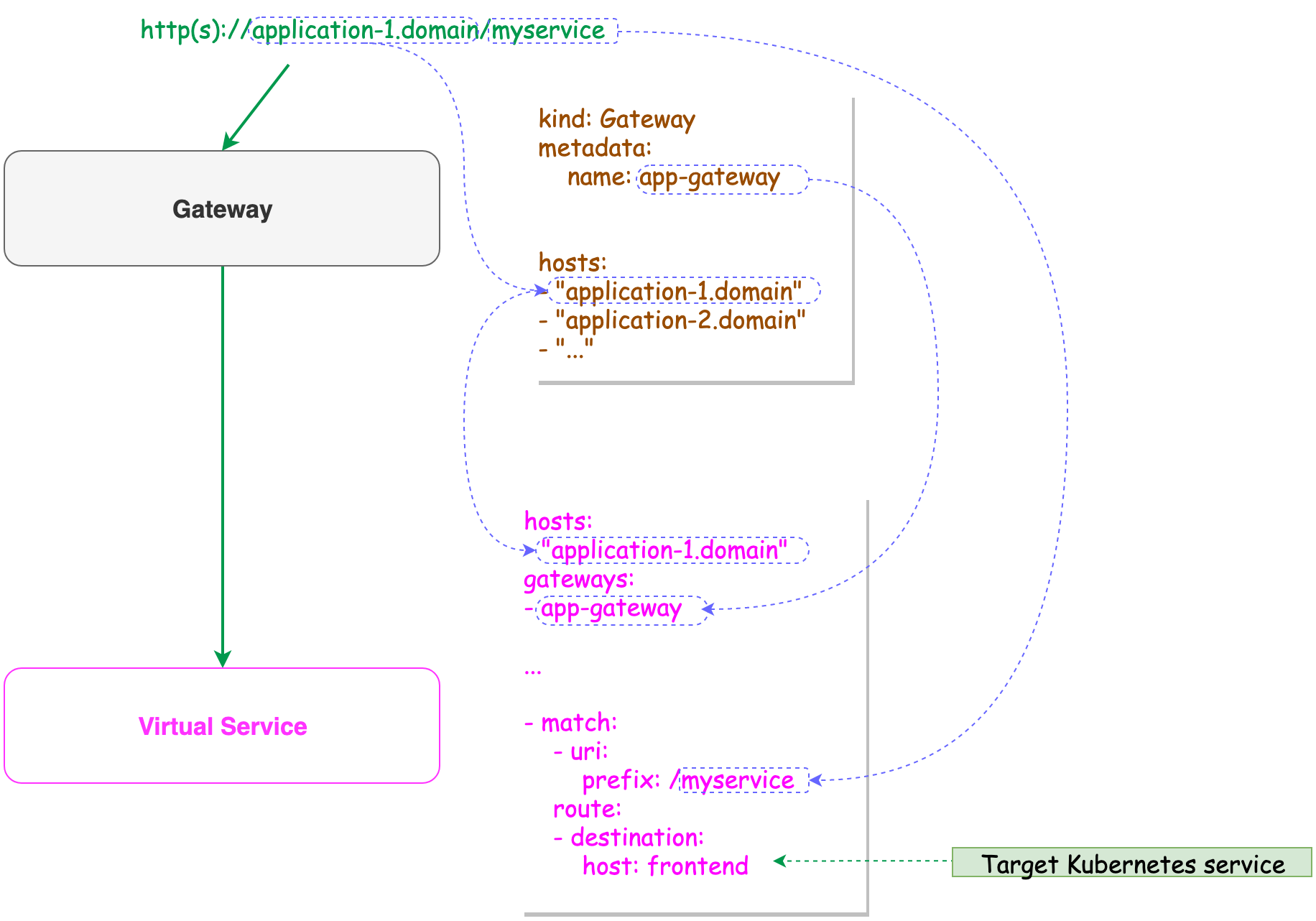

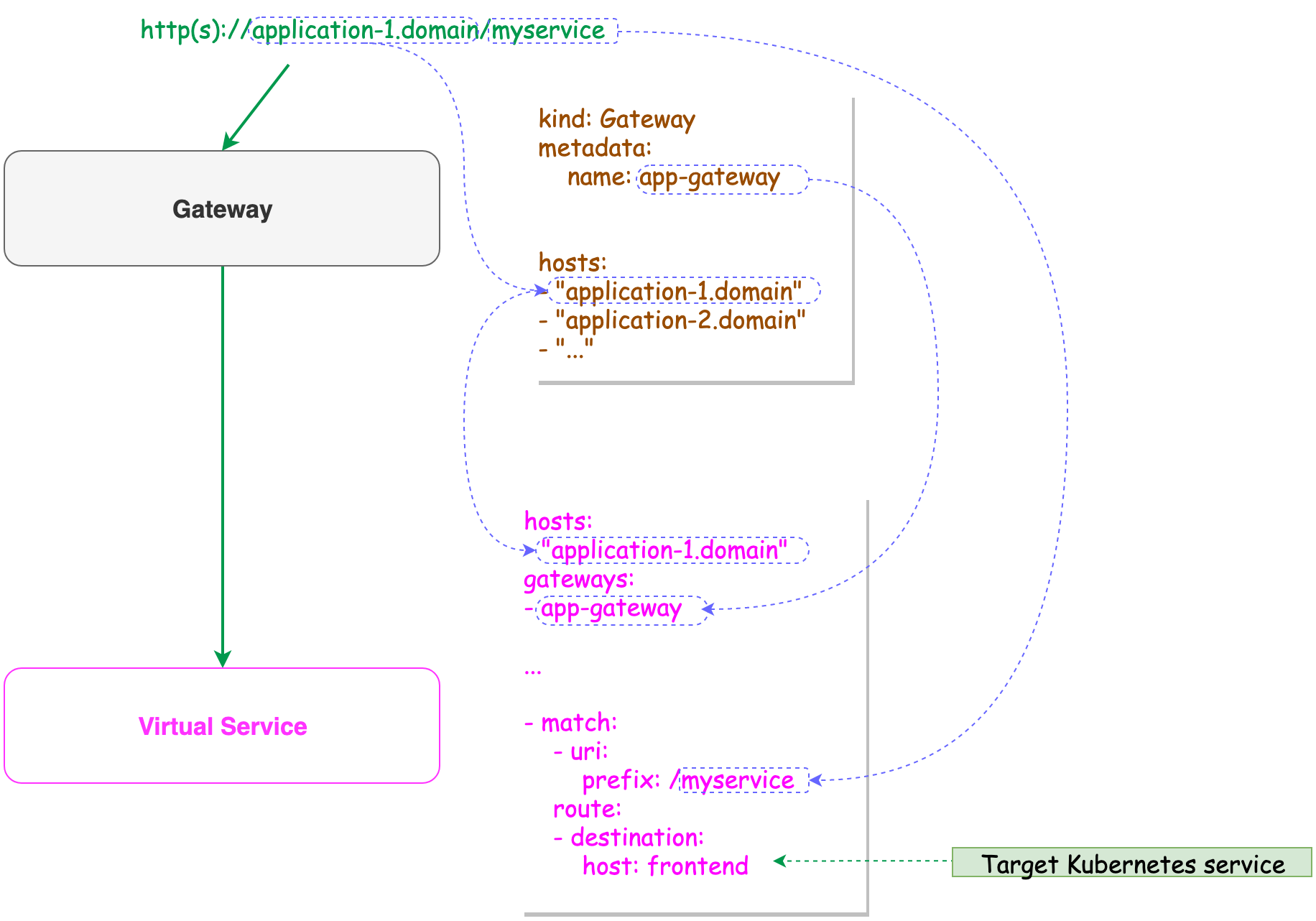

Istio virtual service / gateway

In this environment, every namespace needs its own virtual service and gateway; a virtual service on its own does not work. My guess is that this is because there is no cluster-level gateway in the istio-system namespace:

```shell
kubectl get gateways.networking.istio.io
No resources found in istio-system namespace.
```

A virtual service specifies which gateway it uses as its upstream, and a gateway in turn specifies the upstream ingress gateway.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: k8s-dashboard
  namespace: kubernetes-dashboard
spec:
  hosts:
  - "k8s-dashboard.example.com"
  gateways:
  - k8s-dashboard-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: 443
        host: kubernetes-dashboard
```

- spec.gateways specifies the upstream gateway
- hosts sets the domain name
- port number and host set the target Service's port and name

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: k8s-dashboard-gateway
  namespace: kubernetes-dashboard
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - '*'
```

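- spec.selector picks the upstream ingress gateway by label; Istio looks for matching workloads in all namespaces
- servers: only traffic arriving on tcp/80 over HTTP, for any domain, is forwarded to the virtual services associated with this gateway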

For the relationship between gateway and virtual service, see:

Image source: Ingress Gateway :: Istio Service Mesh Workshop
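The guess above is that the missing piece is a cluster-level gateway in istio-system. For reference, Istio lets a VirtualService refer to a Gateway in another namespace using the <namespace>/<gateway-name> form, so a single shared Gateway is one possible alternative to a Gateway per namespace. This is a sketch only and was not tested in this environment; shared-gateway is a hypothetical name.

```yaml
# Hypothetical shared Gateway living in istio-system.
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: shared-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - '*'
---
# VirtualService in another namespace referencing it as <namespace>/<name>.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: k8s-dashboard
  namespace: kubernetes-dashboard
spec:
  hosts:
  - "k8s-dashboard.example.com"
  gateways:
  - istio-system/shared-gateway
  http:
  - route:
    - destination:
        host: kubernetes-dashboard
        port:
          number: 443
```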

How does Istio traffic flow?

Our environment has aws-load-balancer-controller installed, so creating an Ingress resource automatically requests an ELB from AWS:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    alb.ingress.kubernetes.io/backend-protocol: HTTP
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-taiwan-1:12345678900:certificate/529e0011-bts00-5566-7788-a9
    alb.ingress.kubernetes.io/healthcheck-path: /healthz/ready
    alb.ingress.kubernetes.io/healthcheck-port: "31222"
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
    alb.ingress.kubernetes.io/load-balancer-attributes: routing.http2.enabled=false
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/ssl-redirect: "443"
    alb.ingress.kubernetes.io/subnets: subnet-12345a,subnet-12345b
    alb.ingress.kubernetes.io/success-codes: 200,302
    alb.ingress.kubernetes.io/target-type: instance
    ...
    kubernetes.io/ingress.class: alb
  generation: 1
  labels:
    app: ingress
  name: ingress
  namespace: istio-system
  ...
spec:
  rules:
  - http:
      paths:
      - backend:
          service:
            name: istio-ingressgateway
            port:
              number: 80
        path: /*
        pathType: ImplementationSpecific   # required field in networking.k8s.io/v1
```

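- The annotations are settings consumed for the AWS ELB
- spec.rules sends every domain and path to the istio-ingressgateway Service, which is backed by deployment/istio-ingressgateway; at this point the traffic formally enters the Istio service mesh network
- kubectl describe presents this more readably: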

```
Rules:
  Host        Path  Backends
  ----        ----  --------
  *
              /*    istio-ingressgateway:80
```
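The health-check annotations are easier to read next to the istio-ingressgateway Service, which this note doesn't show. With target-type: instance the ALB targets a node port on each node, and /healthz/ready is served by the ingress gateway's status port (15021 in a stock Istio install). A plausible sketch, assuming the stock Istio port layout and assuming 31222 is the nodePort assigned to status-port:

```yaml
# Hypothetical sketch of the istio-ingressgateway Service (not from this cluster).
apiVersion: v1
kind: Service
metadata:
  name: istio-ingressgateway
  namespace: istio-system
spec:
  type: NodePort        # assumption; LoadBalancer also allocates nodePorts
  selector:
    istio: ingressgateway
  ports:
  - name: status-port   # serves /healthz/ready for the ALB health check
    port: 15021
    targetPort: 15021
    nodePort: 31222     # assumption: matches alb.ingress.kubernetes.io/healthcheck-port
  - name: http2
    port: 80            # the port the Ingress backend points at
    targetPort: 8080
```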

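For how the Istio service mesh sidecar hijacks traffic and takes over a pod's networking, see 理解 Istio Service Mesh 中 Envoy 代理 Sidecar 注入及流量劫持 · Jimmy Song (Understanding Envoy sidecar injection and traffic hijacking in Istio Service Mesh).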

Generating a read-only token

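- Multiple RoleBindings/ClusterRoleBindings can be bound to the same serviceaccount

The ClusterRole (get/list on namespaces) exists so that the namespace menu in the dashboard UI has something to choose from, which makes the dashboard more convenient to use. For easier management, all serviceaccounts are kept in the kubernetes-dashboard namespace.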

```yaml
# Identity that the read-only token belongs to
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rd-read-only
  namespace: kubernetes-dashboard
---
# Read-only access to workloads, limited to the develop namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: kubernetes-dashboard-read-only
  namespace: develop
rules:
- apiGroups: ["*"]
  resources: ["deployments","replicasets","pods"]
  verbs: ["get", "list", "watch"]
---
# Bind the Role to rd-read-only (the ServiceAccount lives in kubernetes-dashboard)
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-read-only
  namespace: develop
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-read-only
subjects:
- kind: ServiceAccount
  name: rd-read-only
  namespace: kubernetes-dashboard
---
# Cluster-wide get/list on namespaces, so the dashboard's namespace menu is populated
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kubernetes-dashboard-get-namespace
rules:
- apiGroups: ["*"]
  resources: ["namespaces"]
  verbs: ["get", "list"]
---
# Bind the ClusterRole to the same ServiceAccount
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-get-namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard-get-namespace
subjects:
- kind: ServiceAccount
  name: rd-read-only
  namespace: kubernetes-dashboard
```
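The manifests above create the identity and its permissions, but not the token itself. Since Kubernetes v1.24 a ServiceAccount no longer gets a long-lived token Secret automatically, so one option is to request one explicitly with a Secret of type kubernetes.io/service-account-token. A sketch, where the Secret name rd-read-only-token is hypothetical:

```yaml
# Long-lived token for the rd-read-only ServiceAccount.
# The token controller populates data.token after the Secret is created.
apiVersion: v1
kind: Secret
metadata:
  name: rd-read-only-token   # hypothetical name
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: rd-read-only
type: kubernetes.io/service-account-token
```

The token can then be read with `kubectl get secret rd-read-only-token -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 -d` and pasted into the dashboard login page. If a short-lived token is enough, `kubectl create token rd-read-only -n kubernetes-dashboard` (kubectl v1.24+) issues one without any Secret.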

References